CSML Apps are a great way to extend the capabilities of your CSML chatbot by integrating other products with your bot without writing any additional code. CSML Studio provides a number of ready-to-use integrations (over 50 and growing!) that make it possible to integrate your chatbot with the most popular services and applications on the market.

CSML Studio integrations are ready to use. To configure an integration, you only need to provide the credentials it requires (and some don't require any authentication at all!). The app's description explains how to get set up.

For instance, if you are looking to add some fun Gifs to your bot, you can try our Giphy integration. To get started, go to Apps > Available Integrations > Giphy.

Simply click on Install, and you are done! This app does not require any credentials, but some apps require credentials or API keys: in that case, you are prompted to add them as environment variables upon installation.

Then, to use the app in your code, you can use it like this:

CSML provides a way to execute external code in any language of your choice via a Foreign Function Interface, represented by the App() keyword.

Here are some ideas for CSML integrations:

file tickets to , or

create leads in , ,

CSML Studio allows you to use your own pre-trained Natural Language Processing (NLP) service directly in your CSML chatbots with very little configuration. You can easily set up your favorite NLP provider in AI & NLU > NLU Configuration:

By default, all bots run in Strict Mode, where the input needs to exactly match one of the given rules to trigger a flow.

When an NLP provider is configured, all text events are sent to the NLP provider and returned either as a payload event if an intent is found, or untouched if no intent is found. When an intent is found, the value of the payload is intent:nameOfIntent.
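To make this routing rule concrete, here is a minimal JavaScript sketch. The simplified event shape (`type`, `payload` and `text` fields) and the `applyNlpResult` function are hypothetical illustrations, not CSML Studio's internal format or code; the platform performs this step for you.

```javascript
// Hypothetical sketch: how a text event and an NLP result combine into the event a flow sees.
// The event shape used here is a simplified illustration, not CSML Studio internals.
function applyNlpResult(textEvent, nlpResult) {
  // No intent found: the text event goes through untouched.
  if (!nlpResult || !nlpResult.intent) {
    return textEvent;
  }
  // Intent found: the event becomes a payload event whose value is "intent:<name>".
  return {
    type: "payload",
    payload: `intent:${nlpResult.intent.name}`,
    text: textEvent.text, // the original text input is still available
  };
}

const input = { type: "text", text: "yes please" };
console.log(applyNlpResult(input, { intent: { name: "YES_INTENT", confidence: 0.92 } }));
console.log(applyNlpResult(input, { intent: null }));
```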

No matter what NLP provider you pick, any event that passes through this process will have the following properties:

A few additional properties are also set in the resulting event:

store data on Airtable, Google Sheet, Amazon DynamoDB

upload files to Box, Google Docs, Office 365

analyze text with SAP Conversational AI, Dialogflow, Rasa

book meetings on Google Calendar, Hubspot, Calendly

trigger events in Zapier, IFTTT, Integromat

generate QR codes, format documents, upload images...

and many more!

There are 2 ways you can augment your CSML chatbot by connecting it to other services: authoring your own custom apps, or installing ready-to-use CSML Integrations.

The Workplace Chat channel offers more or less the same features and limitations as the Messenger channel, as they are built on top of the same platform. However, there are some key differences in how they are installed and configured, and in the specific features and limitations they offer.

CSML Studio supports Slack as one of its channels. Slack is a very popular option at startups and schools, and is widely used as a "ChatOps" solution by tech teams.

Create the perfect Callbot with CSML Studio and Twilio Voice!

Microsoft Teams is a popular channel for enterprise and team use. It allows a diversity of rich messages (images, buttons, carousels...) and is widely used in many companies worldwide. Chatbots for Microsoft Teams benefit from a rich user experience, although setting up a bot for Microsoft Teams is a somewhat complex task.

Due to new limitations by Meta, it is currently impossible to add a new Messenger channel from CSML Studio.

Messenger is one of the most popular chat platforms, with over 1.3B users worldwide. It is also one of the platforms that democratized the use of chatbots. It has a pretty broad set of APIs and allows for some very rich message templates, which gives it a nice user experience. If you are looking to build a publicly-accessible chatbot, Messenger is definitely a solution to look at!

Amazon Alexa is one of the main platforms for launching Voice Bots. Using CSML Studio, you can easily deploy powerful Alexa Skills on any Alexa-activated device in the world. Let us walk you through your journey as a CSML Voice Bot developer!

event._nlp_provider: contains details about the NLP provider integration

event._nlp_result: contains the raw response from the NLP provider

event.text: contains the original text input

You can now use the event by matching a found intent with a flow command, by setting that intent as one of the accepted commands for a flow, using the AI Rules feature.

Alternatively, you can also decide to match buttons or other actions within a flow with the found data. For instance, you can use NLP to detect a YES_INTENT and match it without having to list all the possible ways to say "yes" in the button's accepts array.

CSML Tip: except in very specific scenarios, you should not map your NLU provider's default or fallback intent to a CSML flow, as every input that is not otherwise a detected intent would then trigger that flow. Instead, fallback intents should continue any currently open conversation, or automagically fall back to the default CSML flow when needed.

```
// if an intent is found:
event.intent = {
  "name": "...", // contains the name of the intent
  "confidence": "..." // the confidence score that this is the right intent
}

// or, when no intent is found:
event.intent == null

// if other intents match the request:
event.alternative_intents = [ Intent {}, ... ]

// when entities are found, event.entities contains a map of found entities
event.entities.ENTITY_NAME = [
  {
    "value": "the value",
    "metadata": {
      // ... additional metadata as returned by the NLP provider
    }
  }
]

// when this information is known, the processing language code
event.language = "en"
```

Matching buttons against detected intents:

```
start:
  say Question(
    "Do you like chocolate?",
    buttons=[
      Button("Yes", accepts=["intent:YES_INTENT"]) as btny,
      Button("No", accepts=["intent:NO_INTENT"]) as btnn
    ]
  )
  hold

  if (event.match(btny)) say "I knew it!"
  else if (event.match(btnn)) say "I think you are lying..."
  goto end
```

Using the Giphy app in a flow:

```
start:
  do gifs = App("giphy", action="gif", query="hello")
  say Image(gifs[0])
  goto end
```

CSML Studio currently supports the following NLP providers:

Dialogflow (read our blog post)

SAP Conversational AI (formerly Recast.ai)

Amazon Lex

Microsoft LUIS

Rasa

Wit.ai

IBM Watson

Custom Webhook

Please refer to each provider's documentation for more information on how to generate credentials. CSML Studio supports the most common and secure way of connecting to these providers.

If your favorite provider is not in this list, please reach out to .

You can also add a custom NLP engine by using the custom Webhook integration. This is especially useful if we don't currently support your NLP provider of choice or if you are running your NLP services on premise.

To use the Webhook integration, simply provide a URL that we can send a POST request to, with the following body:

The URL may contain query parameters, for example with an API Key for authentication purposes.

When called, this endpoint must return the response in the following form:

You can test this endpoint directly on the configuration page.
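As an illustration of this contract (a POST body of the form `{ "text": "..." }` in, a JSON object with `intent`, `alternative_intents` and `entities` out), here is a minimal sketch of a custom webhook in Node.js. The keyword table, the intent names and the `NLP_WEBHOOK_PORT` environment variable are hypothetical; a real endpoint would call your own NLP engine instead of matching keywords, and would also check any API key you pass in the URL's query parameters.

```javascript
const http = require("http");

// Hypothetical keyword table standing in for a real NLP model.
const INTENT_KEYWORDS = {
  YES_INTENT: ["yes", "yeah", "sure", "yep"],
  NO_INTENT: ["no", "nope", "nah"],
};

// Naive scoring: confidence = share of the input's words that are keywords of the intent.
function analyzeText(text) {
  const tokens = String(text || "").toLowerCase().match(/[a-z']+/g) || [];
  const scored = [];
  for (const [name, keywords] of Object.entries(INTENT_KEYWORDS)) {
    const hits = tokens.filter((t) => keywords.includes(t)).length;
    if (hits > 0) scored.push({ name, confidence: hits / tokens.length });
  }
  scored.sort((a, b) => b.confidence - a.confidence);
  return {
    intent: scored[0] || null, // null when no intent is found
    alternative_intents: scored.slice(1),
    entities: {},
  };
}

// Parse the { "text": "..." } body and serialize the response in the expected form.
function handleWebhookBody(rawBody) {
  const { text } = JSON.parse(rawBody);
  return JSON.stringify(analyzeText(text));
}

// Only start listening when a port is configured, so the functions can be used on their own.
if (process.env.NLP_WEBHOOK_PORT) {
  http
    .createServer((req, res) => {
      if (req.method !== "POST") {
        res.writeHead(405);
        return res.end();
      }
      let body = "";
      req.on("data", (chunk) => (body += chunk));
      req.on("end", () => {
        let payload;
        try {
          payload = handleWebhookBody(body);
        } catch (err) {
          res.writeHead(400); // malformed request body
          return res.end();
        }
        res.writeHead(200, { "content-type": "application/json" });
        res.end(payload);
      });
    })
    .listen(Number(process.env.NLP_WEBHOOK_PORT));
}
```

The keyword matching is deliberately naive; only the request and response shapes are meant to carry over to a real endpoint.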

To uninstall a Workplace Chat channel, you need to delete the channel both on Workplace, and on CSML Studio. Only removing the channel on CSML Studio will leave the chatbot visible to Workplace users.

To remove the bot from Workplace, visit the Workplace admin panel (under Integrations) and find your bot in the list of available bots.

Click on Uninstall then confirm. Your bot will immediately be removed from Workplace chat, however users that already talked to the bot will still be able to find their conversation history.

After this is done, you can safely remove the channel from CSML Studio as well.

To create a CSML Studio Assistant, click on the channels menu then on create new channel.

Click on Assistant, add a name and a description (you can change it later), then click on Submit.

Your Assistant is now created and ready to use! There are two ways to use your Assistant: either by visiting the link at the top, or by adding the widget link for integrating it into your existing website. It works on mobile too!

CSML (Conversational Standard Meta Language) is an open-source programming language dedicated to building chatbots.

It acts as a linguistic/syntactic abstraction layer, designed for humans who want to let other humans interact with any machine, in any setting. The syntax is designed to be learned in a matter of minutes, but also scales to any complexity of chatbot.

CSML seamlessly and automatically handles short- and long-term memory slots and metadata injection, and can be plugged into any external system by means of or , as well as consume scripts in any programming language using built-in parallelized runtimes.

To learn more about CSML, you can read this .

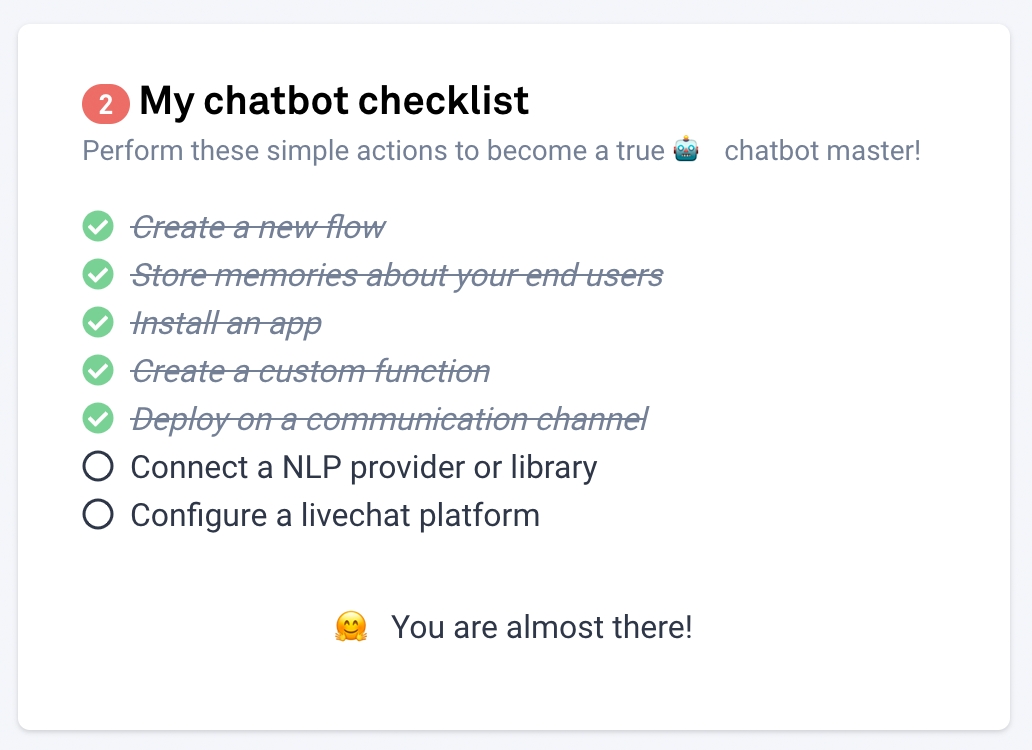

Every time you log in to visit your chatbot, you will be greeted by your chatbot's Dashboard. It gives you a bird's-eye view of how you are doing with your chatbot so far, and provides some hints on how you can improve it.

Here is what it looks like:

You will find 2 different panels: the Assistant and the Checklist. Let's see what each panel does!

In the AI Rules section of CSML Studio, you will find a way to configure flow commands for any of your flows. This section makes it easy to see in one single screen all the rules that govern the triggering of any of your flows!

Commands are words or sentences (or intents, when NLU is configured) that will trigger a given flow. For example, with the rule below, if a user says "Hi", no matter where they are in the bot, they will be redirected to the Welcome flow:

You can have as many AI Rules as you want on as many flows as you want. Commands are case-insensitive, so for example "Hi", "hi", "HI" and "hI" will all trigger the Welcome flow.
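A minimal JavaScript sketch of what case-insensitive command matching means, using a hypothetical rules table and a hypothetical `findFlowForCommand` function. CSML Studio does this matching for you. The sketch only illustrates the behavior described above and is not the platform's implementation.

```javascript
// Hypothetical AI Rules table: flow name -> commands that trigger it.
const AI_RULES = {
  Welcome: ["Hi", "Hello"],
  Help: ["help", "I need help"],
};

// Return the flow whose command matches the input regardless of case, or null.
function findFlowForCommand(input, rules) {
  const needle = input.toLowerCase();
  for (const [flow, commands] of Object.entries(rules)) {
    if (commands.some((command) => command.toLowerCase() === needle)) {
      return flow;
    }
  }
  return null;
}

for (const input of ["Hi", "hi", "HI", "hI"]) {
  console.log(input, "->", findFlowForCommand(input, AI_RULES)); // each input maps to "Welcome"
}
```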

You can find similar configuration settings between and Workchat channels. However, Workchat being a professional, internal-only communication channel, there are some additional options you can play with.

The Broadcast feature allows you to trigger any flow for any given user or list of users. To use the broadcast feature, click on Send broadcast to open a configuration popup.

Due to new limitations by Meta, it is currently impossible to add a new Instagram channel from CSML Studio.

To make it really easy to publish your chatbot, CSML Studio provides a full-featured webapp so that you can easily integrate your chatbot into any existing project. This is the simplest way to deploy your chatbot at scale!

Whatsapp is one of the most used messaging platforms in the world, replacing SMS in many cases. Setting up a Whatsapp channel is slightly more complex than some other channels but it can be a great way to get in touch with your audience!

Request body sent to the webhook:

```json
{
  "text": "TEXT_TO_ANALYZE"
}
```

Expected response:

```
{
  // intent can also be null if no intent is found
  "intent": {
    "name": "NAME_OF_INTENT",
    "confidence": 0.84
  },
  "alternative_intents": [
    // ... other matching intents
  ],
  "entities": {
    "someEntity": [
      {
        "value": "somevalue",
        "metadata": {
          // ... any additional information about the entity
        }
      },
      // ... other values for the same entity
    ],
    // ... other entities
  }
  // ... any additional data: sentiment analysis, language...
}
```

The easiest way to develop CSML chatbots is to use the CSML development studio, starting either from a sample bot available in your library or from a bot of your own.

Each bot gives you full access to the CSML framework, a set of specialized tools to build and customize your chatbots: code editor, chatbox, apps, functions and files library, analytics and channels.

You can create your own conversations by modifying an existing flow or creating your own.

A flow is a CSML file which contains several steps to be followed during a conversation with a user. The first instructions have to be placed in the start step, then you can move from a step to the next one using goto stepname, and finish the current conversation using goto end. Each step contains instructions on how to handle the interactions within the step: what to say, what to remember, where to go...

For the bot to say a sentence, you just need to use the keyword say followed by the type of message you want to send. The keyword say lets you:

to display a message: text, questions, urls, components (image, video, audio, buttons…)

to simulate behaviors, such as waiting time (Wait) or message composition (Typing)

Like a human, a chatbot is supposed to understand and react to messages sent by the user.

In a conversational logic, CSML allows the chatbot to wait for a user answer using the keyword hold, and interpret the expected user input (called event) to trigger an action.

Here is an example of a simple interaction where the bot is asking if the user likes guitar and waiting for two specific answers in order to trigger actions.

Memory is essential in the CSML logic. When two people are chatting, they constantly memorize and update information to be reused in future discussions. CSML provides two types of variables: local variables, with a very short life cycle, and persistent variables, called memories.

Memories are assigned with the remember keyword and can be reused in any step or in a future conversation, while local variables only exist within a single step and are assigned with the use keyword.

You can output any variable into a string value with the double curly braces.

You can select an existing integration in the apps library or add your own function coded in any language. Find a few examples in our GitHub repository and how to use functions in our documentation.

Once your function is uploaded, you can test it and call it in a CSML flow. Read more about functions and apps here!

Once your chatbot is ready to chat with your users, you can select the channel you want to connect on. For example, using the CSML Studio you can connect your bots to Slack, Messenger, Whatsapp and other channels, or use the CSML Client API to receive requests.

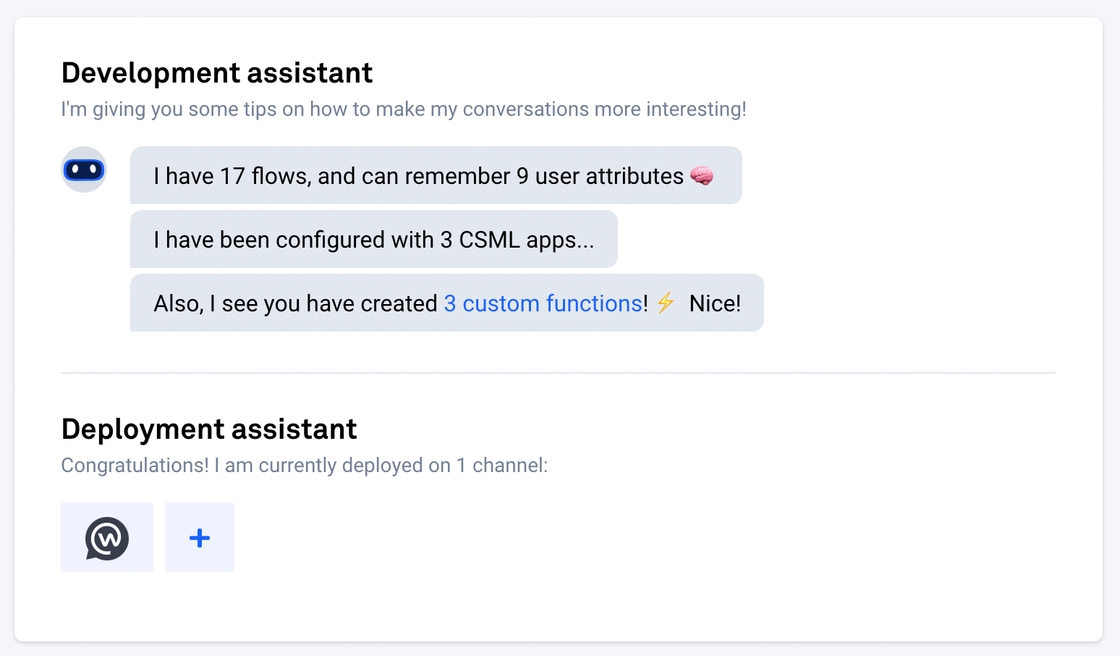

The Assistant, taking the form of a simple chatbot, will help you quickly identify the current status of your chatbot and give you hints on what you could improve to make an even better chatbot. There are 2 sections, corresponding to the 2 major phases of preparing a chatbot for production: development, and deployment.

The Development assistant will lead you to add more flows to your bot, remember more information about your end users and add more capabilities with the help of integrations and custom apps.

The Deployment assistant will help you add your chatbot to a communication channel or manage your existing channels.

The checklist will help you quickly identify areas where you can improve your chatbot. It tells you what is missing to make your chatbot as complete as possible in the form of a simple to-do list of what most good chatbots should be doing.

Make sure every item of the checklist is ticked, and you will be on your path to a great chatbot!

Another section that's very important is the Activity page.

This page, as the name indicates, gives you a high-level overview of your chatbot's activity in the past 3 months. If you are seeing a steady increase in usage, then you are probably doing things right. However, if your numbers are stalling or decreasing, perhaps you can take some action like sending a broadcast or adding new content?

We trust that you will find The Dashboard quite instructive in your path to create the greatest possible chatbot!

You can send a broadcast to a given user by using _metadata.id as their user_id with the broadcast API or the broadcast interface in the corresponding tab in the channel's settings. It requires that the user has already interacted with the chatbot before (you cannot simply send a message to a user's @username).

You can add custom metadata as well!

This feature can also be used in conjunction with any Preprocessing or NLP configured in your bot. For instance, if an intent is detected after going through an NLP service, the intent name can be used as a command for any given flow (with the intent: prefix, e.g. intent:myIntentName). This is how an intent called DemoContext would be handled with AI Rules:

Under Flow, select the flow you want to trigger

Under Targets, list the emails (one per line) you want to receive the broadcast

Under Context, you can optionally add any additional context that you want to set in the _metadata object for this broadcast

Once you hit Send, the broadcast will be sent immediately.

If you already have custom code that you would like to use in your chatbot, you can import it directly as a custom app in CSML Studio and use it without any change.

In CSML Studio, apps are run by default on AWS Lambda, which ensures maximum availability, scalability and security by isolating your code in separate containers. The supported runtimes are currently:

Nodejs v10.x and 12.x

Java 8 and 11

Python 2.7, 3.6, 3.7 and 3.8

Dotnetcore 2.1 and 3.1

Go 1.x

Ruby 2.5 and 2.7

To get started with AWS Lambda-compatible deployment packages in any language, .

Deprecation notice: the original Fn() notation for calling Apps (formerly called Functions) in your CSML code has been replaced by the newer App() built-in as of CSML v1.5. Both notations will continue to work until CSML v2 is released, but this documentation will only reference the new App() usage from now on.

The easiest way to author custom apps in CSML Studio is to use the Quick Mode, which provides you with a code editor to create your app directly in the browser. There are several limitations:

only nodejs is supported (support for python will be added shortly)

quick apps can not contain more than 4096 characters

custom modules can not be added (however all native node modules are supported)

environment variables can not be configured

If you need more configuration options (other languages, bigger package size, custom modules), we recommend using the Complete Mode as explained below.

As an example, let's create a simple nodejs 12.x app that will return a random user from . This code performs a simple GET request to https://randomuser.me/api and returns the first user in the returned array. The code is available : download it or clone it to get started.

To test this program, simply run node test.js at the root of the project. You should see something like the following:

Select Add Custom App at the top-right corner of the apps page, which brings you directly to Quick Mode (nodejs). Simply copy-paste the content of index.js into the code editor, choose a name for your app, then click Save.

You can now use your app in your CSML Chatbot, like this:

With complete mode, a few extra steps are needed, as you will need to provide the code in a deployment package and upload it on the platform.

At the root of the project as downloaded from GitHub, run the command `zip -r9 ../randomuser.zip .` to create a deployment package for your app, which you will then be able to import in CSML Studio.

You MUST run this command inside the project directory. Zipping the folder itself produces an invalid deployment package.

In the sidebar, click on Apps > Add a new app. In the app creation page, upload your deployment package, give your app a distinctive and unique name such as getRandomUser, leave index.handler as your app's main handler and select nodejs12.x as the runtime.

You can leave the sections below as is for now; if your app took any argument or required any environment variable, this is where you would configure it.

Once you are done, simply save. You can now use your app in your CSML Chatbot as above.

As in every development project, apps have a processing cost associated with them. The more complex the task, the longer it takes to perform and the worse your user experience will be. Keep in mind that running apps also increases the latency between the user's input and the bot's response. If possible, try to stick to native CSML code!

It is always a good practice to only return what you intend to use. For example, if you are interfacing with a product catalog, do you need to get all 3542 items in the catalog, or will the first 5 suffice? If you are retrieving a list of items, what properties from that list do you actually need?
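A minimal sketch of this advice in a Node.js app, using a hypothetical 3542-item catalog: the app returns only the first five items, and only the fields the bot actually displays. The catalog data and `summarizeCatalog` are illustrative assumptions.

```javascript
// Hypothetical catalog standing in for data fetched from a product API.
const catalog = Array.from({ length: 3542 }, (_, i) => ({
  id: i + 1,
  name: `Product ${i + 1}`,
  price: 10 + (i % 50),
  description: "A long description that the bot never displays...",
  stock: { paris: 12, lyon: 4 },
}));

// Keep only the first `limit` items and only the fields the bot needs.
function summarizeCatalog(items, limit = 5) {
  return items.slice(0, limit).map(({ id, name, price }) => ({ id, name, price }));
}

const summary = summarizeCatalog(catalog);
console.log(JSON.stringify(catalog).length, "->", JSON.stringify(summary).length, "characters");
```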

Apps are limited to 30s of execution time. If it takes longer, the app will stop and return an error.
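One way to stay under this limit is to race slow work against your own, shorter deadline and return a fallback that your flow can handle, rather than letting the platform stop the app. Here is a minimal sketch, where `withTimeout`, the 25-second budget and the fallback shape are arbitrary illustrative choices, not platform values. This approach does not cancel the underlying work: it only stops waiting for it.

```javascript
// Resolve with `fallback` if `task` has not settled within `ms` milliseconds.
function withTimeout(task, ms, fallback) {
  let timer;
  const deadline = new Promise((resolve) => {
    timer = setTimeout(() => resolve(fallback), ms);
  });
  // Clear the timer either way so it does not keep the process alive.
  return Promise.race([task, deadline]).finally(() => clearTimeout(timer));
}

// Example: a lookup that takes 200 ms, with a 50 ms budget, resolves to the fallback.
const slowLookup = new Promise((resolve) => setTimeout(() => resolve({ ok: true }), 200));
withTimeout(slowLookup, 50, { error: "timeout" }).then((result) => console.log(result));

// In an app, the budget would sit safely below 30 s (doWork is hypothetical):
// exports.handler = async () => withTimeout(doWork(), 25000, { error: "timeout" });
```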

You can launch a specific flow (and step) instead of the default Welcome Flow when loading the Assistant by providing a ref query parameter in its URL.

To force the launch of a specific flow when opening the Assistant URL, use the following special syntax:
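Such links can also be generated programmatically, for example when a website embeds several entry points into the same bot. Here is a minimal sketch of a hypothetical `assistantUrl` helper. It assumes that the base Assistant URL does not yet contain a query string, and it percent-encodes flow and step names in case they contain special characters.

```javascript
// Build an Assistant URL of the form
//   BASE?ref=target:STEP@FLOW  (or BASE?ref=target:FLOW to use the `start` step)
function assistantUrl(baseUrl, flow, step) {
  const target = step
    ? `target:${encodeURIComponent(step)}@${encodeURIComponent(flow)}`
    : `target:${encodeURIComponent(flow)}`;
  return `${baseUrl}?ref=${target}`;
}

const base = "https://chat.csml.dev/s/abc12345678901234";
console.log(assistantUrl(base, "NAME_OF_FLOW", "NAME_OF_STEP"));
console.log(assistantUrl(base, "NAME_OF_FLOW"));
```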

By default, the Assistant channel does not include any specific context about the conversation (see the _metadata object ). In some cases, it can be useful to load the Assistant (or Widget) with pre-existing metadata.

Some common scenarios:

the bot is loaded in a website where the user is already known: in that case, we may be interested in injecting the user's context (name, email...) inside the conversation.

the user is authenticated on the parent website: in that case, we may want to inject an authentication token into the user's conversation for subsequent calls.

the same bot is used across several websites and we want to know which website the user is currently visiting: in that case we can inject a unique website identifier into the conversation.

The injected data is available in the _metadata global variable, available in every flow.

The code of the example above is:

To add custom metadata in your Assistant, simply add the encoded (with ) JSON string of the metadata you want to inject to the query parameters of the URL. The URL of the Assistant in the example above would be:

You can change the appearance of the Assistant by visiting the Design tab of the configuration panel. This lets you have even more control over the final experience for your end users, and match your own branding even better!

Many elements are configurable:

Chatbot avatar, header card color and background image

User bubble colors

Hiding the CSML branding

Disabling the speech input

In CSML Studio, every channel generates a set of API keys that you can use to perform various operations, such as managing the bot, sending broadcasts, getting information about current conversations... Channels are external connectors to your bot.

Every channel has different capabilities but all channels rely on the same API methods.

All calls are to be made to https://clients.csml.dev.

The API is versioned, and all calls must be prefixed by their version number. The most recent version is v1. The API is reachable on route prefix /api.

Hence, the complete endpoint for the API is https://clients.csml.dev/v1/api.

To ensure the stability of downstream systems, API requests are throttled. Each client (a set of API key/secret) can only send up to 50 reqs/sec. Over this limit, an error code is returned and the request is not processed.
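Staying below 50 reqs/sec on the client side avoids these errors in the first place. Here is a minimal sliding-window limiter sketch with an injectable clock for testing. The `RateLimiter` class is hypothetical. The server's exact throttling algorithm is not described here, so treat this as a conservative approximation.

```javascript
// Client-side sliding-window limiter: at most `limit` requests per `windowMs`.
class RateLimiter {
  constructor(limit = 50, windowMs = 1000, now = () => Date.now()) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.now = now;
    this.timestamps = [];
  }

  // Returns true (and records the request) if it may be sent now, false otherwise.
  tryAcquire() {
    const t = this.now();
    // Drop timestamps that fell out of the current window.
    while (this.timestamps.length > 0 && t - this.timestamps[0] >= this.windowMs) {
      this.timestamps.shift();
    }
    if (this.timestamps.length >= this.limit) return false;
    this.timestamps.push(t);
    return true;
  }
}

const limiter = new RateLimiter(50, 1000);
// Before each API call: if (!limiter.tryAcquire()) { /* wait, then retry */ }
```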

On the API channel exclusively, you can also register a webhook, which will be called with a POST request every time a message is sent by the bot to the end user. This is a good way to handle the outgoing messages in real time! The body of the webhook call is documented .

How to setup a Facebook Messenger channel in CSML Studio

There are several prerequisites to building a Messenger chatbot, which we will assume are already taken care of. Before we start, please make sure that you already have:

an active Facebook account

a Facebook page that you are an admin of, for your chatbot to be associated with

a CSML chatbot ready to go

In CSML Studio, visit Channels > Connect new channel > Messenger. Click on Continue with Facebook to grant permissions to CSML Studio to connect bots to your chosen pages. This starts a standard Facebook login flow:

After this, simply select the page you want to link to the bot, and wait a few seconds.

Voilà! You can now search for your chatbot in Facebook Messenger (it will be listed under the page's name).

To create a webapp channel, click on the channels menu then on create new channel.

Click on Webapp, add a name and a description (you can change it later), then click on Submit.

After a few seconds, your webapp is created and ready to use!

There are two ways to use a webapp: either by visiting the link at the top, or by adding the embed link for integrating it into your existing website. It works on mobile too!

Workplace Chat is very, very similar to Messenger (except that it's grey). Please refer to Messenger's CSML documentation:

Broadcasts can be sent without any limitation to any of your Workplace Chat users, using either their valid Workplace email address or their ID as the user_id.

A sample _metadata for an incoming event will be similar to the following object:

The first thing you need is an Instagram Business account. Luckily, switching from a personal to a business account is a straightforward process: simply follow this guide.

Some common issues may come up and prevent you from correctly connecting your chatbot to your Instagram account:

Make sure that your Instagram account has the Business type (not the Creator type). You can switch from a Creator to a Business account under Settings > Account > Switch to Business Account.

You also need to allow access to messages for the chatbot to be able to access them. .

You will also need a Facebook page linked to your Instagram Business account. You can easily create a new page or use an existing one, and connect it to your Instagram account by .

To connect your Instagram account to your CSML Studio chatbot, visit the Channels page, then click on Instagram to create a new Instagram channel. Then, follow the Login with Facebook flow, and make sure to select both the Instagram Business Account, and the Facebook Page it is linked with:

Also, make sure that all the requested permissions are checked:

After this flow, you will be presented with a list of your Facebook pages. Select the one that is linked to your Instagram page:

After a few seconds, you are redirected to a new page. It's done!

Ice Breakers provide a way for users to start a conversation with a business with a list of frequently asked questions. A maximum of 4 questions can be set. This feature is not available on Desktop!

To setup Instagram Ice Breakers, simply update the corresponding field in the channel's configuration page:

Each Ice Breaker must consist of a question and a payload. To remove all Ice Breakers, simply set this field to an empty array []!
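Before saving, you may want to check the array against these rules: at most 4 entries, each with a question and a payload. Here is a minimal sketch of a hypothetical `validateIceBreakers` function. It assumes that each entry is an object with `question` and `payload` string fields. Check the channel's configuration page for the exact format it expects.

```javascript
// Check an Ice Breakers array against the rules above; returns a list of problems.
function validateIceBreakers(iceBreakers) {
  if (!Array.isArray(iceBreakers)) return ["Ice Breakers must be an array"];
  const errors = [];
  if (iceBreakers.length > 4) errors.push("At most 4 Ice Breakers can be set");
  iceBreakers.forEach((item, i) => {
    const entry = item || {};
    if (typeof entry.question !== "string" || entry.question.trim() === "") {
      errors.push(`Item ${i}: missing question`);
    }
    if (typeof entry.payload !== "string" || entry.payload.trim() === "") {
      errors.push(`Item ${i}: missing payload`);
    }
  });
  return errors;
}

const iceBreakers = [
  { question: "What are your opening hours?", payload: "OPENING_HOURS" },
  { question: "Where is your store?", payload: "STORE_LOCATION" },
];
console.log(validateIceBreakers(iceBreakers)); // []
```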

Chatwoot is an open-source livechat platform, with an online SaaS version as well as a self-hosted version. If you are looking for a livechat solution that you can have full control over, or one with a free plan if you prefer the SaaS version, we recommend Chatwoot!

To get started, go to your bot's Settings > Livechat then select Chatwoot as your livechat support platform.

You will need to retrieve:

the URL of your Chatwoot instance. If you are using chatwoot.com, it will be https://app.chatwoot.com

Chatbots are great, but sometimes your users will need some extra human help to go beyond what the chatbot can do. With CSML Studio, you can decide to bypass CSML and get your users to talk to a human agent instead! This is especially useful if the user needs help beyond what the chatbot can propose.

In order to get your users to talk to your human agents over livechat, you will need:

a CSML chatbot and channel setup

a supported Livechat platform

CSML Studio offers a number of other bot configuration options. Let's do a quick tour!

When you need to access some static values across all your users and all your flows, you should use Bot Environment Variables.

These values are injected in your conversations under the _env global variable and are accessible everywhere, without ever being injected in the current user's memory. They are also encrypted for enhanced security.

This is a perfect use case for API keys or secrets, as well as global configuration options.

# will redirect to the step NAME_OF_STEP in the flow called NAME_OF_FLOW

https://chat.csml.dev/s/abc12345678901234?ref=target:NAME_OF_STEP@NAME_OF_FLOW

# or, if no step is provided, the `start` step will be used

https://chat.csml.dev/s/abc12345678901234?ref=target:NAME_OF_FLOWcurl -X "POST" "https://clients.csml.dev/v1/api/conversations/open" \

-H 'content-type: application/json' \

-H 'accept: application/json' \

-H 'x-api-key: ${X-API-KEY}' \

-H 'x-api-signature: ${X-API-SIGNATURE}' \

-d $'{

"user_id": "some-user-id"

}'{

"has_open": true,

"conversation": {

"id": "43d5939f-4afc-4953-9e53-4c28b33cedd8",

"client": {

"channel_id": "dd446008-3768-41df-9be9-f6ea0371f920",

"user_id": "some-user-id",

"bot_id": "b797a3b6-ad8c-446c-acfe-dfcafd787f4e"

},

"flow_id": "31c2c4b0-05d6-44ce-9442-e87e9ae7e8a2",

"step_id": "start",

"metadata": {

"somekey": "somevalue",

},

"status": "OPEN",

"last_interaction_at": "2019-11-16T19:57:42.219Z",

"created_at": "2019-11-16T19:57:42.219Z"

}

}{

"_channel": {

"app_id": "10067197168686",

"name": "test-22032021",

"type": "workchat"

},

"email": "[email protected]",

"first_name": "John",

"id": "581595168271418",

"last_name": "Doe",

"link": "https://work.facebook.com/app_scoped_user_id/HASH",

"name": "John Doe",

"name_format": "{first} {last}",

"picture": {

"data": {

"height": 1016,

"is_silhouette": false,

"url": "https://platform-lookaside.fbsbx.com/platform/profilepic/?asid=581595189176358&height=1200&width=1200&ext=NUMBER&hash=HASH",

"width": 1016

}

}

}{

"user_id": "U01M7K2U93K",

"image_192": "https://secure.gravatar.com/avatar/186ddaf086f2b86827311ojk8a9453dc.jpg?s=192&d=https%3A%2F%2Fa.slack-edge.com%2Fdf10d%2Fimg%2Favatars%2Fava_0002-192.png",

"avatar_hash": "q126ddaf086f",

"status_emoji": "",

"first_name": "John",

"real_name_normalized": "John",

"display_name": "",

"skype": "",

"phone": "",

"fields": [],

"title": "",

"last_name": "",

"image_48": "https://secure.gravatar.com/avatar/186ddaf086f2b86827311ojk8a9453dc.jpg?s=48&d=https%3A%2F%2Fa.slack-edge.com%2Fdf10d%2Fimg%2Favatars%2Fava_0002-48.png",

"status_text": "",

"real_name": "John",

"email": "<mailto:[email protected]|[email protected]",

"status_expiration": 0,

"image_512": "https://secure.gravatar.com/avatar/186ddaf086f2b86827311ojk8a9453dc.jpg?s=512&d=https%3A%2F%2Fa.slack-edge.com%2Fdf10d%2Fimg%2Favatars%2Fava_0002-512.png",

"image_24": "https://secure.gravatar.com/avatar/186ddaf086f2b86827311ojk8a9453dc.jpg?s=24&d=https%3A%2F%2Fa.slack-edge.com%2Fdf10d%2Fimg%2Favatars%2Fava_0002-24.png",

"image_32": "https://secure.gravatar.com/avatar/186ddaf086f2b86827311ojk8a9453dc.jpg?s=32&d=https%3A%2F%2Fa.slack-edge.com%2Fdf10d%2Fimg%2Favatars%2Fava_0002-32.png",

"image_72": "https://secure.gravatar.com/avatar/186ddaf086f2b86827311ojk8a9453dc.jpg?s=72&d=https%3A%2F%2Fa.slack-edge.com%2Fdf10d%2Fimg%2Favatars%2Fava_0002-72.png",

"display_name_normalized": "",

"status_text_canonical": "",

"_channel": {

"type": "slack",

"name": "Slack channel",

"app_id": "A01NUE2CBHC"

}

}

Send a broadcast on the requested channel (supported channels only) to the requested client. Broadcast requests are queued and usually sent within a few seconds. If a target is unavailable, no error is generated.

curl "https://clients.csml.dev/v1/api/broadcasts" \
  -H 'content-type: application/json' \
  -H 'accept: application/json' \
  -H 'x-api-key: YOUR_API_KEY' \
  -d $'{
  "payload": {
    "content": {
      "flow_id": "myflow"
    },
    "content_type": "flow_trigger"
  },
  "client": {
    "user_id": "some-user-id"
  },
  "metadata": {
    "somekey": "somevalue"
  }
}'

No broadcast can be sent to a user who has not already actively interacted with the chatbot.
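If you prefer calling the Broadcasts API from a backend script, the same request can be sketched in Python with only the standard library. This is a minimal sketch, not an official client: `build_broadcast_request` is a hypothetical helper name, `YOUR_API_KEY` is a placeholder, and the endpoint, headers and payload are copied from the curl example above. It only builds the request; sending it is a single `urllib.request.urlopen` call.

```python
import json
import urllib.request

BROADCAST_URL = "https://clients.csml.dev/v1/api/broadcasts"


def build_broadcast_request(api_key, user_id, flow_id, metadata=None):
    """Build (but do not send) a broadcast request triggering `flow_id` for `user_id`.

    Hypothetical helper: the payload mirrors the curl example for the Broadcasts API.
    """
    body = {
        "payload": {
            "content": {"flow_id": flow_id},
            "content_type": "flow_trigger",
        },
        "client": {"user_id": user_id},
        "metadata": metadata or {},
    }
    return urllib.request.Request(
        BROADCAST_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "content-type": "application/json",
            "accept": "application/json",
            "x-api-key": api_key,  # public endpoint: the API Key alone is enough
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_broadcast_request("YOUR_API_KEY", "some-user-id", "myflow", {"somekey": "somevalue"})
    # To actually send it (requires a valid API key):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
    print(req.get_method(), req.full_url)
```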

A sample _metadata for an incoming event will be similar to the following object:

{

"id": "29:1s83dfDjC67Mst-8raeSEanxC9xENBhZpVcDA2pSIa0ItR3hhYa-xP-ttaSPKKYkSdC1LL7Eq9Q3a7jNoNcCdPQ",

"objectId": "87209afd-cad1-41e7-bdee-048c600f5859",

"name": "John Doe",

"givenName": "John",

"surname": "Doe",

"email": "[email protected]",

"userPrincipalName": "[email protected]",

"tenantId": "jn8si7dd-7e22-4e93-aa20-4d44f18627cf",

"userRole": "user",

"conversationId": "a:1KmhjQAB7zmrktvuoQv4EnRI_Hx6vH6a_JPoQvhdrVFTLf78tavBHtsUjst9iecdBybfiKbIQSTiMlm6wApO2o6R-EZSdAj8_Ll2BVPv5rZ9zZxPZmMTwMk59kIojSuDM",

"_channel": {

"type": "msteams",

"name": "John's channel"

}

}

Question (buttons will be added in a new line under the question's title)

Image

Other components will be automatically reduced to simple text components.

Each incoming message sent to CSML Studio will include a number of metadata fields, based on the information provided by Twilio. An example _metadata object would look like this:

{

"ToCountry": "FR",

"ToState": "",

"SmsMessageSid": "SMfaXXXX",

"NumMedia": "0",

"ToCity": "",

"FromZip": "",

"SmsSid": "SMfaXXXXX",

"FromState": "",

"SmsStatus": "received",

"FromCity": "",

"Body": "Hello",

"FromCountry": "FR",

"To": "+33644648719",

"ToZip": "",

"NumSegments": "1",

"MessageSid": "SMfaXXXX",

"AccountSid": "ACa6XXXX",

"From": "+336XXXXXXX",

"ApiVersion": "2010-04-01",

"_channel": {

"type": "twilio-sms",

"name": "XXXXXXXXXXXX"

}

}

{

"request_id": "57d2d4ab-6994-4449-82bf-206619d9d063",

"client": {

"user_id": "some-user-id",

"bot_id": "bc5de819-e4c5-463e-9090-5648fac3a570",

"channel_id": "b1f74f8d-99b6-40b2-ad64-00ec364bae23"

},

"payload": {

"content_type": "flow_trigger",

"content": {

"flow_id": "myflow",

"close_flows": true

}

},

"metadata": {

"somekey": "somevalue"

}

}

Apps only get 512MB of RAM by default. Any excessive resource usage will cause the app to stop executing immediately.

If you save the output of your app with do x = App(..) (or with remember), the data is subject to the usual memory size limitations. In general, if your app returns more than a few KB of data, ask yourself whether you really need all of it: a chatbot is text-based and therefore very light, so you will most likely not be able to display that data anyway, and it is often better to strip it down directly in the app itself!
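For instance, a hypothetical app could keep only the fields your flow actually displays before returning. The response below is made-up sample data and `strip_for_chatbot` is an illustrative name, not part of CSML Studio; the point is simply that the trimming happens inside the app, before the data ever reaches your do x = App(..).

```python
import json

# Made-up, verbose API response standing in for what a third-party service might return.
RAW_RESPONSE = {
    "name": {"title": "Mr", "first": "John", "last": "Doe"},
    "email": "john.doe@example.com",
    "location": {"street": "1 Main St", "city": "Paris", "coordinates": {"lat": "48.85", "lng": "2.35"}},
    "login": {"uuid": "0000", "username": "jdoe", "sha256": "f" * 64},
    "picture": {"large": "https://example.com/l.jpg", "medium": "https://example.com/m.jpg"},
}


def strip_for_chatbot(raw):
    """Return only what the flow will actually display (hypothetical helper)."""
    return {
        "name": {"first": raw["name"]["first"], "last": raw["name"]["last"]},
        "email": raw.get("email"),
    }


if __name__ == "__main__":
    slim = strip_for_chatbot(RAW_RESPONSE)
    # Compare the serialized sizes of the full and trimmed payloads.
    print(len(json.dumps(RAW_RESPONSE)), "->", len(json.dumps(slim)), "bytes")
```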

And many others!

your access token (which you can find at the bottom of your profile settings) and

Hit Save, and a dedicated inbox will be created for you!

We recommend you configure a "Signing off" canned response in Chatwoot to trigger the LIVECHAT_SESSION_END event under Settings > Canned Responses.

You can simply copy and paste the text below, or adjust to your liking - just make sure that the LIVECHAT_SESSION_END is at the end of the content.

Let us know if we can do anything else for you - we are here to help.

LIVECHAT_SESSION_END

Using livechat in your flows is very easy. You can find an example implementation to import in CSML Studio here on GitHub.

To start a livechat session, the user needs to specifically request a livechat session with an available agent. This is achieved by getting the user to send the payload LIVECHAT_SESSION_START. When this happens, your CSML chatbot stops receiving events, and all messages are sent to the livechat backend of your choice.

To end a session, the user must send the payload LIVECHAT_SESSION_END. To do this, we recommend that your agent send a "signing off" message containing that payload, which will be automatically transformed into a suitable button.

The session will also expire if no message is exchanged between the agent and the user within 60 minutes by default (or the timeout configured in the livechat settings panel). When that happens, an event is sent to your bot with the payload LIVECHAT_SESSION_EXPIRED.

This example flow explains in detail the lifecycle of a livechat request:

We support several livechat solution providers, and we encourage you to try them all to see what fits your use case best! There is usually a free version available to try their product.

Once you have configured the livechat integration, you can also set other parameters:

Livechat session timeout: this is the maximum amount of time a livechat session will remain active if no message is exchanged between the agent and the user. Any message sent from either side will reset that window. Default: 1hr.

Session end message and button label: to end a session, the agent should send the user a "LIVECHAT_SESSION_END" event, which will be converted into a text and a button that the user can click to confirm they do want to end the livechat session. If the user does not click the button or says anything else, the session continues. This field lets you configure that message.
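To make this lifecycle more concrete, here is a small conceptual model in Python. It is a sketch under our own assumptions, not CSML Studio's implementation: `LivechatSession` and `receive` are hypothetical names, and the model only encodes the rules described above (LIVECHAT_SESSION_START opens a session, any message from either side resets the inactivity window, LIVECHAT_SESSION_END closes it, and an idle session expires with LIVECHAT_SESSION_EXPIRED).

```python
from dataclasses import dataclass, field
from typing import List, Optional

DEFAULT_TIMEOUT_S = 60 * 60  # default livechat session timeout: 1 hour


@dataclass
class LivechatSession:
    """Conceptual model of the livechat lifecycle (hypothetical, not CSML Studio's code)."""

    timeout_s: int = DEFAULT_TIMEOUT_S
    active: bool = False
    last_message_at: Optional[float] = None
    bot_events: List[str] = field(default_factory=list)  # events the bot would receive

    def receive(self, payload: str, now: float) -> bool:
        """Process one message (from the user or the agent) at time `now`, in seconds.

        Returns True if the session is active after this message, i.e. the message
        was handled by the livechat backend rather than by the bot.
        """
        # Expire an idle session before handling the new message.
        if self.active and now - self.last_message_at > self.timeout_s:
            self.active = False
            self.bot_events.append("LIVECHAT_SESSION_EXPIRED")

        if payload == "LIVECHAT_SESSION_START":
            self.active = True
        elif payload == "LIVECHAT_SESSION_END":
            self.active = False

        if self.active:
            self.last_message_at = now  # any message resets the inactivity window
        return self.active
```

With the default 1-hour timeout, a message sent 55 minutes after the previous one keeps the session alive in this model, while one sent more than 60 minutes later first produces a LIVECHAT_SESSION_EXPIRED event and is then handled by the bot.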

To configure the webhook, you can either use a TwiML app, or directly set a webhook in the messaging configuration panel. Either way, you will need to set the HTTP POST webhook to https://clients.csml.dev/v1/twilio/sms/request, for example like so:

The last step is to provide CSML Studio with a means of verifying that the requests sent to the Twilio endpoint are indeed coming from Twilio. To do so, visit your Twilio Project Settings and copy your Account SID and Auth Token, found under API Credentials:

To set up your Twilio SMS channel in CSML Studio, simply visit the Channels section and create a new Twilio SMS channel. Then fill the required fields with the information you gathered earlier.

Once you click on Submit, your bot is ready to use!

In the next screen, grant the Project > Editor role to the service account:

The third step (Grant users access to this service account) is optional and can be skipped. Click on Done to create the service account.

Once the service account is created, you will need to generate a key. Select the service account, click on Add Key > Create New Key, select JSON as the Key type, and save the file.

In CSML Studio, under Channels > Connect a new channel, select the Google Chat channel. In the next screen, give your channel a name, a description and upload your service account credentials.

In the next screen, you can configure the bot's Welcome Flow, and copy the Chatbot Endpoint URL, which we will use in the next step.

To finalize the configuration, visit https://console.developers.google.com/apis/api/chat.googleapis.com/hangouts-chat (make sure that the selected project is still the same one as before) and fill the information as requested.

You can select Bot works in direct messages or Bot works in rooms... depending on the experience you want to give to users.

Under Bot URL, paste the Chatbot Endpoint URL from the previous step.

Click Save, and you are all set!

To find your bot in Google Chat, users have to navigate to the Bot catalog, which can be reached either by clicking the + icon next to the bot section of the left sidebar, or by searching for the name of the bot directly:

Upon activating the bot for the first time, the Welcome Message will be displayed:

In the next screen, you will be able to create a display name, which is also the default invocation for your action. Read more about how to pick the right display name here!

Finally, you will also need to retrieve your action project's ID, which you can easily copy from the URL:

In CSML Studio, create a new Google Assistant channel from the Channels page and fill in the requested fields. The name and description can differ from the name of the Action, they are only visible inside CSML Studio!

After you have created the channel, download and install the gactions CLI utility from this page: https://developers.google.com/assistant/actions/actions-sdk/. This tool will let you "bind" your Action and the CSML Studio project, using the gactions.json package that you can download on the channel's configuration page:

You will need to execute the command on a terminal and follow the instructions to authenticate your requests with Google. Concretely, after you execute the command, you will need to click on the link that the command will prompt you to visit, login with your Google account, and copy and paste the given code:

After you have done this process, you can go test the bot in the Test panel:

Simple texts

Wait() and Typing()

Medias: Image(), Video(), Audio(), File()

Url()

Question() and QuickReply()

Markdown is partially supported (only bold, italic and strikethrough).

Whatsapp provides an easy way to generate QR codes that let users reach your chatbot easily. You can read more about it here: https://developers.facebook.com/docs/whatsapp/business-management-api/qr-codes

As a security measure, user access tokens expire after about 60 days. If your Whatsapp chatbot remains unused for longer than that, the token will expire and you will need to reconnect your app to restore it.

Whatsapp has a very limited set of interactive components. For example, buttons are text-only, and your users will have to type the actual text of the button.

The mapping of Question() and Button() components to text is performed automatically. However, you need to set the right title and accepts values in order to correctly match the user's input.

The title of a button must be between 1 and 20 characters long. You can however manually set a longer payload if needed:

say Button("Click here!", payload="MY_BUTTON_CLICK_HERE_PAYLOAD")

The Url() component does not support any alternative text or title. Only the given url will be shown as is.

For instance, the following code:

Has the following output:

You must upload videos in mp4 format. Videos hosted on platforms such as Youtube, Dailymotion or Vimeo will not display (use the Url() component).

You must upload audio files in mp3 format. Audio files hosted on platforms such as Spotify, Deezer or Soundcloud will not display (use the Url() component).

There is a hard limit of 25MB for all uploaded files (including image, video and audio files).
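If your bot sends dynamic media, a quick pre-flight check can catch most of these issues before they reach Whatsapp. This is a hedged Python sketch: `check_whatsapp_media` is a hypothetical helper, the format is guessed from the URL's file extension (a heuristic, not real format detection), and 25MB is assumed to mean 25 * 1024 * 1024 bytes.

```python
import os
from urllib.parse import urlparse

# Assumption: "25MB" read as 25 * 1024 * 1024 bytes.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024
# Formats required by the Whatsapp channel for videos and audio files.
REQUIRED_EXTENSION = {"video": ".mp4", "audio": ".mp3"}


def check_whatsapp_media(kind: str, url: str, size_bytes: int) -> list:
    """Return the reasons this media would likely fail to display on Whatsapp (empty if none).

    The file format is guessed from the URL path's extension, which is only a heuristic.
    """
    problems = []
    expected = REQUIRED_EXTENSION.get(kind)
    extension = os.path.splitext(urlparse(url).path)[1].lower()
    if expected and extension != expected:
        problems.append(f"{kind} files must be {expected[1:]}, got {extension or 'no extension'}")
    if size_bytes > MAX_UPLOAD_BYTES:
        problems.append(f"file exceeds the 25MB limit ({size_bytes} bytes)")
    return problems
```

A Youtube link such as https://www.youtube.com/watch?v=abc is flagged for a video, which matches the advice to use the Url() component for hosted videos instead.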

In a File() component, not all file types are supported. A list of supported file types could not be found, but it is safe to recommend using very common file types only.

The file upload process happens asynchronously on Whatsapp, so messages might show out of order if there is a large upload with not enough time left for its upload before sending the next message.

Carousel() and Card() components are not supported.

If you have strong requirements for data hosting or prefer hosting the CSML Engine on your own servers, you may want to use the On-Premise feature. With this feature, you have full control over which version of the CSML Engine is used at any given time (otherwise, the Studio always defaults to the latest available version), but you are also responsible for maintaining and scaling the engine according to your usage. We recommend that you only use this option if you really know what you are doing or if you have strong legal (e.g. data privacy, compliance) requirements for doing so.

Designing a callbot is very different from designing a text-based chatbot. There are however some extra rules to make a successful callbot:

Keep all your sections short. Nobody wants to listen to (or can remember) a huge block of text!

This integration supports both speech and DTMF (keypad) inputs. When you can, let the caller use the number keypad on their phone, as it has much better success rates than speech to text!

It is impossible/difficult to know in advance what language a user will speak, so we recommend using a different phone number/TwiML app for each language that you intend to serve with this chatbot.

All conversations start at the welcome_flow defined in the channel. Callbot conversations never continue where they left off.

To hang up on a user, simply reach a goto end.

By default, there is a timeout of 5s on every hold. If the user does or says nothing, the conversation stops.

As you can expect, not all CSML components work well with a callbot, as there is no support for any visual component. However you can use all of the following components:

Text

Question

Audio

Typing

Callbots on CSML Studio also support an additional custom component, CallForwarding, which redirects the user to a different phone number. This is very useful in triage-type chatbots: when the request is qualified enough, you can simply redirect the user to the right person or service!

Each call made to CSML Studio will have a number of metadata, based on what information is provided by Twilio. An example _metadata object would look like this:

For now, you cannot create multilingual Twilio callbots, as the language is set in the channel's settings. However, this feature can be added upon request, so let us know if you need it!

CSML Studio comes with a CLI (command-line interface) tool that helps you develop your chatbot locally before deploying it. With the CSML Studio CLI, you can update your flows using your favorite code editor on your local machine and publish it on CSML Studio. For larger projects, we recommend using the CSML Studio CLI over the online interface as it makes it easier to manage many flows.

To set up the CSML Studio CLI, simply install the tool with npm install -g @csml/studio-cli (requires Node.js 12+). Then, using your bot's API keys (see the Studio API section to learn more about creating API keys), run csml-studio init -k MYKEY -s MYSECRET -p path/to/project.

This command will set up a local development environment where you can manage your chatbot entirely from the comfort of your machine!

Broadcasting to a large number of targets can be done really easily with the CSML Studio CLI tool. Indeed, you simply need to prepare a file with all your targets:

Then, simply run the following command, which will send each of these targets a broadcast (message initiated from the bot) on the configured channel (based on the given API keys):

How to configure an Amazon Alexa skill with CSML Studio

To create an Amazon Alexa skill, you will first need to create a free developer account on https://developer.amazon.com/alexa, then visit the Alexa Skills Kit (ASK) console and click on Create Skill.

In the next section, give your skill a name and select the default language. This can be changed later! Under Choose a model to add to your skill, select Custom, and under Choose a method to host your skill's backend resources, select Provision your own, then click on Create Skill at the top.

In the next screen, select Start from scratch. Alexa will then build your skill.

Once the skill is built, you will need to connect it with CSML Studio. This step is actually very easy. First, find and copy the Skill ID of your skill:

Then, in CSML Studio, create a new Alexa channel for your bot under Channels > Amazon Alexa and set the name and ID of your skill. You will also need to copy the HTTPS Endpoint URL to configure the Skill in the ASK console later. You can then save for now!

To finalize the configuration, in the ASK Console, visit your skill's Build tab > Endpoint. By default, AWS Lambda ARN is selected: select HTTPS instead and under Default Region, paste your HTTPS Endpoint URL from CSML Studio and select the SSL Certificate option "My development endpoint is a sub-domain of a domain that has a wildcard certificate from a certificate authority", then click on Save Endpoints.

Your skill is now correctly configured with CSML Studio!

Step by step guide to install a Slack channel

The Slack integration provides you with a Manifest that lets you set up most of the heavy Slack App configuration in seconds. To get started, simply click on the link provided here:

Simply follow the on-screen instructions until the app is installed to your workspace. You will be required to allow the app to perform the necessary actions - you must be a workspace admin to do that.

You will still need to perform a few actions to correctly setup your app. First, visit the App Manifest menu. It may have an orange warning box at the top: in that case, click the "Click here to verify" link to make that warning disappear. Another way to perform this verification if needed is by visiting Event Subscriptions and performing the same operation.

Next, visit the OAuth & Permissions menu, copy the Bot User Token and save it for later.

Next, select App Home in the left-side menu. Enter a Display Name and a Default Name for your bot and select Always Show My Bot as Online.

At the bottom of the same App Home page, under Show Tabs, make sure the Messages Tab toggle is on and the Allow users to send Slash commands and messages from the messages tab checkbox is checked.

Next, visit Basic Information from the left-side menu, copy the App ID, Client ID, Client Secret and Signing Secret under App Credentials and save them for later.

On the same page, you can also customize the appearance of your chatbot in Slack (image, colors...).

Finally, back in CSML Studio, paste the credentials you retrieved earlier: Bot User Token, App ID, Client ID, Client Secret, Signing Secret, then click on Submit.

You should now be able to see your app in the left sidebar of your Slack workspace, under Apps. This is where you can chat with your Slack bot!

How to configure a callbot on CSML Studio with Twilio Voice

To get started, you will need to create a Twilio account and purchase a phone number in the country of your choice. The only point of attention is that you must make sure that the number you purchase has Voice capabilities. Please keep in mind that depending on your country of origin, the requirements may differ, and Twilio may ask you for business verification. Keep track of this phone number (including the country code), as you will need to add it to CSML Studio later!

TwiML is Twilio's own markup language for connecting with their programmable interfaces. You will need to create a new app by and clicking on Create a new app, then fill the Voice Request URL and provide with this URL: .

Once you have created this app, find its Application SID by clicking on its name again on the list of TwiML apps, and keep this information for later.

You will then need to link this Application with the phone number you purchased earlier. To do so, visit the , and click on your number to configure it. Under Voice & Fax, select Accept incoming voice calls, configure with TwiML App and pick the TwiML App you created earlier.

The last step is to provide CSML Studio with means to make sure that the requests that are sent to the Twilio Voice endpoint are indeed coming from Twilio. To do so, and copy your Account SID and Auth Token, found under API Credentials:

To set up your Twilio Callbot in CSML Studio, simply visit the Channels section and create a new Twilio (Voice) channel. Then fill the required fields with the information you gathered earlier.

About the Language field: as Twilio provides Speech-To-Text and Text-To-Speech capabilities, it must know in advance what language the user is supposed to speak. You must specify the full ISO locale code (e.g. en-US, fr-FR...).

Once you click on Submit, your bot is ready to use!

Using CSML Studio, you can connect and deploy your chatbot on a number of communication channels. Each channel has its own set of limitations and features, so when developing a chatbot you must always keep in mind how your end users will be using it. Vocal chatbots are not consumed the same way as textual chatbots, so it sometimes makes sense to design an entirely separate user experience for each channel.

CSML can not compensate for limitations in each third-party channel. Instead, it provides a universal way to describe conversational components and an adaptor will try to match with what is available on that channel.

In most cases, CSML will try to find a sensible default behavior for each component on each channel. For instance, some channels cannot display actual buttons, so they will be displayed as plain text: in that case, a good practice is to place buttons in an ordered list and accept each button's number as a trigger for that button.
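Here is a minimal Python sketch of that good practice: render the buttons as a numbered list, then accept either the button's number or its title as the user's answer. The function names are hypothetical and this is not the code of any CSML channel adapter; in a real flow you would express the same matching with your Button() titles and accepts values.

```python
from typing import List, Optional


def render_buttons_as_text(title: str, buttons: List[str]) -> str:
    """Render a question and its buttons as plain text with a numbered list."""
    lines = [title] + [f"{i}. {label}" for i, label in enumerate(buttons, start=1)]
    return "\n".join(lines)


def match_button(user_input: str, buttons: List[str]) -> Optional[str]:
    """Return the selected button label, matched by number or by case-insensitive title."""
    text = user_input.strip()
    if text.isdecimal():
        index = int(text) - 1  # the list shown to the user starts at 1
        return buttons[index] if 0 <= index < len(buttons) else None
    for label in buttons:
        if label.casefold() == text.casefold():
            return label
    return None
```

For example, render_buttons_as_text("Do you confirm?", ["yes", "no"]) produces the question followed by "1. yes" and "2. no" on separate lines, and both "2" and "NO" then match the "no" button.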

In most cases, CSML also lets you send a raw object to the channel adapter. For example, whilst CSML does not (yet) cover Airline Templates for Messenger, you can always send a raw object if you need it:

Most channels will provide a similar way of accessing native behavior for situations where CSML Components are not sufficient.

If you plan to use the same chatbot on multiple channels at the same time, there may be times where you need your chatbot to have a different behavior based on the type of channel: changing a text, displaying a static image instead of a video, altering the buttons...

Instagram Chatbots are quite similar to Messenger Chatbots, but there are quite a few differences. Here is everything you can do with Instagram Chatbots!

The Instagram channel supports many CSML components: Text, Typing, Wait, Image, Question, Button, Card, Audio, Video, Url, File.

Note that the Typing component is converted to a simpler Wait component, and Carousel components are entirely unavailable.

Also, all types of Buttons do not display at all on the web version of Instagram (but a vast majority of Instagram users are on the mobile app).

Instagram supports , with the same limitations as with Messenger. Please refer to the for more information!

Failing to respect this rule may get your chatbot and/or page banned from Facebook or Instagram!

In general, all Instagram limitations apply. For example, texts can not be larger than 2000 UTF-8 characters, and attachment sizes (Videos, Audio, Files) can not be larger than 25MB.

Regular buttons are limited to 13 per component. The length of the button title should also never be longer than 20 characters.
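To catch these limits before a message is rejected, you could run a small check like the one below. It is a sketch with a hypothetical function name; note that it counts Unicode characters with len(), which is our reading of "2000 UTF-8 characters", not a confirmed definition.

```python
from typing import List

MAX_TEXT_CHARS = 2000
MAX_BUTTONS = 13
MAX_BUTTON_TITLE_CHARS = 20


def instagram_limit_violations(text: str, button_titles: List[str]) -> List[str]:
    """List the Instagram limits that a message would exceed (empty list if none)."""
    violations = []
    if len(text) > MAX_TEXT_CHARS:  # counts Unicode characters, not bytes
        violations.append(f"text has {len(text)} characters (max {MAX_TEXT_CHARS})")
    if len(button_titles) > MAX_BUTTONS:
        violations.append(f"{len(button_titles)} buttons (max {MAX_BUTTONS})")
    for title in button_titles:
        if len(title) > MAX_BUTTON_TITLE_CHARS:
            violations.append(f"button title too long: {title!r}")
    return violations
```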

You can also refer to the for relevant information on other Facebook limitations.

A sample _metadata for an incoming event will be similar to the following object:

By default, the webapp channel does not include any specific context about the conversation (see the _metadata object documentation). In some cases, it can be useful to load the webapp (or chatbox) with pre-existing metadata.

Some common scenarios:

the bot is loaded in a website where the user is already known: in that case, we may be interested in injecting the user's context (name, email...) inside the conversation.

the user is authenticated on the parent website: in that case, we may want to inject an authentication token into the user's conversation for subsequent calls.

the same bot is used across several websites and we want to know which website the user is currently visiting: in that case we can inject a unique website identifier into the conversation.

The injected data is available in the _metadata global variable, available in every flow.

The code of the example above is:

To add custom metadata in a webapp, simply add the URL-encoded JSON string of the metadata you want to inject to the query parameters of the webapp's URL. The URL of the webapp in the example above would be:
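To build that URL programmatically, you could use something like the following Python sketch. It assumes, as in the {ASSISTANT_URL}?metadata=... example, that the webapp reads compact, percent-encoded JSON from a metadata query parameter; `webapp_url_with_metadata` is a hypothetical helper and the base URL in the usage note is a placeholder. With safe="", urllib.parse.quote escapes the same characters as that example.

```python
import json
from urllib.parse import quote


def webapp_url_with_metadata(assistant_url: str, metadata: dict) -> str:
    """Append URL-encoded JSON metadata to the webapp URL as the `metadata` query parameter."""
    # Compact JSON (no spaces), then percent-encode everything except unreserved characters.
    encoded = quote(json.dumps(metadata, separators=(",", ":")), safe="")
    separator = "&" if "?" in assistant_url else "?"
    return f"{assistant_url}{separator}metadata={encoded}"
```

Called with a placeholder base URL such as https://example.com/webapp and {"firstname": "Jane", "email": "jane.doe@company.com"}, it appends exactly the metadata=%7B%22firstname%22... string shown in the example.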

You can change the appearance of the webapp by visiting the Appearance tab of the webapp configuration panel. This lets you have even more control over the final experience for your end users, and match your own branding even better!

Many elements are configurable:

Chatbot avatar, header card color and background image

User bubble colors

Hiding the CSML branding

Disabling the speech input

There are two prerequisites for installing a Whatsapp bot in the CSML Studio.

you MUST have a verified Meta Business account

you MUST have a Meta Developer account

And more generally, you must be able to follow this guide to setup a Meta app correctly: .

In your bot in CSML Studio, go to Channels > Connect a new channel, then select Whatsapp.

In the next screen, enter a name and description for your channel (it is purely informational and can be changed later) and add the required credentials.

The App ID and App Secret can be found in the app's Basic Settings page:

The User Access Token, Phone Number ID (and not the actual phone number!) and WhatsApp Business Account ID can be found under Whatsapp > Getting Started. You can start with one of the Test numbers provided by Facebook, but in production you will want to change that to your own phone number once it is properly configured.

Then, click Save to setup the channel. In the following page, you will also receive your Webhook configuration parameters:

Use these parameters in the Whatsapp > Configuration page under Callback URL and Verify token. Make sure to also subscribe to the messages Webhook field.

The final step before you can deploy this app to production is to (if not already done), and obtain .

All API calls are authenticated with the combination of two separate keys: API Key and API Secret.

The API Key is the public key and can be used in clear on the client side (your website, your app...). The API Secret should never be shared publicly and should only be set on your server.

There are two different modes of authentication for Studio endpoints: Private or Public.

CSML Studio also offers a way to preprocess every incoming event of certain types, in order to add some metadata or transform it. Here are some use cases:

transcribe audio inputs

translate incoming text

save uploaded files to a dedicated file storage

The Messenger channel on CSML Studio offers some configuration options. More will be added over time!

When a user starts using your bot, the first thing they will see is a Get Started button (the text will be automatically translated in their own configured language and can not be changed). In the background, a "GET_STARTED" payload is sent to the bot.

This setting helps you configure which flow will be triggered by this specific payload. By default it will be the bot's default flow, but you might want to have a different behavior for what happens when users connect to your bot for the very first time (or when they have deleted the conversation before and want to start anew).

start:

do user = App("getRandomUser")

say "The random user's name is: {{user.name.first}} {{user.name.last}}"

goto end

start:

say "Hi {{_metadata.firstname}}"

say QuickReply(

"Your email address is: {{_metadata.email}}. Do you confirm?",

buttons=[Button("yes"), Button("no")]

)

hold

{ASSISTANT_URL}?metadata=%7B%22firstname%22%3A%22Jane%22%2C%22email%22%3A%22jane.doe%40company.com%22%7D

start:

say Url("https://www.google.com", text="Go to google")

goto end

Public Endpoints (the Chat and Broadcasts API endpoints) can be authenticated by providing only the value of the API Key in the X-Api-Key header. They can be accessed from the frontend, e.g. by creating your own chat interface integrated in your app or website.

When calling Chat and Broadcasts API endpoints, you only need to set the X-Api-Key header as follows:

Private Endpoints are endpoints that should only be accessible to the developer of the chatbot, as they allow modifying the chatbot in its entirety or accessing conversation data (Bot and Conversations API endpoints). To authenticate your Private Endpoints requests, you need to set the X-Api-Key and X-Api-Signature headers. The CSML Studio Private APIs use HMAC authentication to ensure that all calls are properly signed with both the API Key and API Secret, while preventing man-in-the-middle and replay attacks.

The X-Api-Signature computation MUST NEVER be done on the client side, as this would expose your API Secret, and anyone in control of it could also control your bot (make changes, get user data...). Obviously, never share your API Secret with anyone in clear text.

In the X-Api-Key header, concatenate the API Key and the current unix timestamp (in seconds), separated by the | character.

In the X-Api-Signature header, provide the HMAC-SHA256 of the X-Api-Key header value, keyed with your API Secret, as a hexadecimal string prefixed with sha256=.

The CSML server will be able to validate that both the API Key and the API Secret used are valid, without requiring you to provide the API Secret in clear text. Replay attacks are prevented by invalidating all calls a few minutes after the timestamp provided in the X-Api-Key header.
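To illustrate how both the signature and the timestamp can be checked, here is a hedged Python sketch of the signing side and of what a verifier could do with the two headers. It is not the CSML server's code: `sign` and `verify` are hypothetical names, and the 300-second window is our assumption standing in for "a few minutes".

```python
import hashlib
import hmac
import time
from typing import Dict, Optional, Tuple


def sign(api_key: str, api_secret: str, timestamp: int) -> Tuple[str, str]:
    """Return the (X-Api-Key, X-Api-Signature) header values. Run this server-side only."""
    x_api_key = f"{api_key}|{timestamp}"
    digest = hmac.new(api_secret.encode("utf-8"), x_api_key.encode("utf-8"), hashlib.sha256).hexdigest()
    return x_api_key, "sha256=" + digest


def verify(x_api_key: str, x_api_signature: str, secrets: Dict[str, str],
           max_age_s: int = 300, now: Optional[float] = None) -> bool:
    """Hypothetical verifier: known API Key, fresh timestamp, matching HMAC."""
    now = time.time() if now is None else now
    try:
        api_key, raw_timestamp = x_api_key.rsplit("|", 1)
        timestamp = int(raw_timestamp)
    except ValueError:
        return False  # malformed X-Api-Key header
    secret = secrets.get(api_key)
    if secret is None or abs(now - timestamp) > max_age_s:
        return False  # unknown key, or stale (possibly replayed) request
    # Recompute the HMAC over the exact header value that was received.
    expected = "sha256=" + hmac.new(secret.encode("utf-8"), x_api_key.encode("utf-8"), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, x_api_signature)
```

Because the timestamp is part of the signed value, an attacker cannot refresh an old request's timestamp without the API Secret, and the age check rejects the original once the window has passed.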

To authenticate your Private calls, you need to calculate the signature based on the Api Key and the Api Secret that you can find in CSML Studio, as well as the current timestamp. Here are implementation examples of the X-Api-Key and X-Api-Signature headers in Node.js and Python.

NB: this calculation should never be performed client-side!

Then, perform an authenticated call as follows:

csml-studio broadcast -i myfile.csv --flow_id myBroadcastFlow -k MYKEY -s MYSECRET

{

"_channel": {

"name": "test_myinstapage",

"page_id": "866763783487618",

"type": "instagram"

},

"name": "John Doe",

"id": "1106013791815671",

"profile_pic":"https://example.com/XXXX"

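}

const crypto = require('crypto');
// Assumes API_KEY and API_SECRET hold the keys found in CSML Studio (server-side only!)
const UNIX_TIMESTAMP = Math.floor(Date.now() / 1000);
// X-Api-Key header value: "<API Key>|<unix timestamp in seconds>"
const XApiKey = `${API_KEY}|${UNIX_TIMESTAMP}`;
// HMAC-SHA256 of the X-Api-Key value, keyed with the API Secret, hex-encoded
const signature = crypto.createHmac('sha256', API_SECRET)
  .update(XApiKey, 'utf-8')
  .digest('hex');
const XApiSignature = `sha256=${signature}`;

import time
import hmac
import hashlib

# Assumes API_KEY and API_SECRET are strings holding the keys found in CSML Studio
timestamp = str(int(time.time()))
# X-Api-Key header value: "<API Key>|<unix timestamp in seconds>"
x_api_key = API_KEY + "|" + timestamp
# HMAC-SHA256 of the X-Api-Key value, keyed with the API Secret, hex-encoded
signature = hmac.new(
    API_SECRET.encode("utf-8"),
    x_api_key.encode("utf-8"),
    digestmod=hashlib.sha256,
).hexdigest()
x_api_signature = "sha256=" + signature

curl -X "POST" "https://API_ENDPOINT" \
  -H 'content-type: application/json' \
  -H 'X-Api-Key: YOUR_API_KEY' \
  -d $'YOUR_REQUEST_BODY'

curl -X "POST" "https://API_ENDPOINT" \
  -H 'content-type: application/json' \
  -H 'X-Api-Key: YOUR_API_KEY_AND_TIMESTAMP' \
  -H 'X-Api-Signature: YOUR_CALCULATED_API_SIGNATURE' \
  -d $'YOUR_REQUEST_BODY'

Wait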

perform OCR on each uploaded image

run a custom natural language processing library on text inputs (for that use case, you may also want to look at Natural Language Processing)

Preprocessors are simply external apps that are run on every incoming event (of the given type) and return a valid CSML event. Their only requirement is that they must accept and return standard CSML event payloads. Preprocessors that fail to accept or return such payloads are simply ignored, and your incoming event will not be transformed.

Let's create a simple Text preprocessor that will return a picture of a hot dog if the user's input contains the words "hot dog", or change the text to "Not hot dog" otherwise.

To add a preprocessor, you first need to add a preprocessor-compatible app under the apps section (see here). For this tutorial, let's just use the Quick Mode, but of course you can use Complete Mode as well.

This is the code we are going to use:

It is really simple: if the user's text input contains the words "hot dog" anywhere, then return an image of a hot dog. Otherwise, change the text to "Not hot dog". In both cases, the original, untransformed event is still accessible with event._original_event in your CSML code, if needed.
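The same transformation logic can be sketched in Python as follows. This is a hedged sketch: it assumes CSML event payloads of the form {"content_type": "text", "content": {"text": ...}} and {"content_type": "image", "content": {"url": ...}}, which is our assumption rather than a documented contract, the image URL is a placeholder, and the way Quick Mode actually invokes your code may differ.

```python
import re

# Placeholder image URL (hypothetical): replace it with a real hot dog picture.
HOT_DOG_IMAGE_URL = "https://example.com/hotdog.jpg"


def preprocess(event: dict) -> dict:
    """Hot dog / not hot dog text preprocessor (sketch, assumed payload shape)."""
    if event.get("content_type") != "text":
        return event  # only text events are transformed; anything else passes through
    text = event.get("content", {}).get("text", "")
    # Case-insensitive match on "hot dog" (also accepts "hotdog").
    if re.search(r"hot\s*dog", text, flags=re.IGNORECASE):
        return {"content_type": "image", "content": {"url": HOT_DOG_IMAGE_URL}}
    return {"content_type": "text", "content": {"text": "Not hot dog"}}
```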

Next, go to Apps > Add Custom App and under Quick Mode, simply copy and paste it, select a name for your app (for instance, hotdog seems like a fitting name), and click save.

Then, head to the Preprocessing page. We only want to preprocess text events, but of course, we could do a similar app for other types of events.

Now, back to the CSML Editor, you can do something like the following:

start:
  if (event.get_type() == "image") say Image(event)
  else say event
  goto end

To configure a welcome flow, simply select the flow you want to run when the user clicks on the Get Started button from the dropdown list.

You can use Referral (or Ref Parameters) to directly send users to various sections of your chatbot instead of the standard Welcome Flow. There are two scenarios:

If the user has never used your bot before (or has deleted their conversation), they will be shown the default Get Started button, but when they click on it, they will reach the target flow instead of the welcome flow.

If the user has already used the bot, they will go directly to the defined flow instead of resuming where they left off.

If a user uses a link with a Ref parameter that is not configured properly, the regular behaviour will persist (the Get Started button will redirect to the welcome flow, and existing conversations will simply continue).

There are two ways to use Ref parameters to redirect to flows. You can either configure them in the channel's settings page by adding connections between custom Ref parameters and a target flow:

Or you can use the following special syntax:

The main advantage of the second syntax is that you can generate the links on the go; however, the first method is more flexible as you can easily change where a link is redirected even after it is created, and the content of the Ref parameter is entirely customizable.

Messenger makes it possible to add a persistent menu to your bots.

We offer a simple way to set precisely the persistent menu you want, by letting you add a JSON-formatted payload to set as the bot's persistent menu. Any valid persistent menu configuration is accepted.
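As an illustration, a persistent menu in the Messenger Platform format can be built and serialized to JSON as below. This is a sketch under assumptions: the titles, payload and URL are placeholders, and whether the Studio field expects the full object with the top-level "persistent_menu" key (as shown) or only its array is not confirmed here.

```python
import json

# Messenger Platform persistent menu; titles, payload and URL are placeholders
persistent_menu = {
    "persistent_menu": [
        {
            "locale": "default",
            "composer_input_disabled": False,
            "call_to_actions": [
                {"type": "postback", "title": "Talk to an agent", "payload": "TALK_TO_AGENT"},
                {"type": "web_url", "title": "Visit our website", "url": "https://example.com"},
            ],
        }
    ]
}

# JSON string to paste into the persistent menu setting
print(json.dumps(persistent_menu, indent=2))
```

A postback item sends its payload to the bot when tapped, while a web_url item opens the given link.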

This is not really a configuration option, but know that the _metadata object inside your flows will contain some info about your user: their name, first_name, and last_name will be accessible in the conversation's metadata, unless they are not correctly set in their profile.

There is also an id field; however, this is not the user's real ID but a so-called app-scoped user ID, which cannot be traced back to the user's actual Facebook ID.

do channel_type = _metadata._channel.type
// WhatsApp cannot display clickable buttons,
// so we must provide a number to select instead
if (channel_type == "whatsapp-messagebird") say Button("1 - Pick me!")
else if (channel_type == "webapp") say Button("Pick me!")

The Assistant channel also comes with a configurable website plugin (called the Widget) that can be added to any website by adding a single line in your source code. The Widget will then appear as a small bubble in the bottom-right corner of your site for every visitor.

Simply add the following line of code in your source code, just before the closing </body> tag (replace it with the actual line that you can find in your Webapp configuration panel):

Once this is done, a chat bubble will appear as follows on every page where that code is included.

The Widget lets you configure a custom popup message to help you engage more actively with your customers. You can even have default greeting messages depending on the page where the widget is loaded! Here is how it works:

Greetings are matched with their page based on the URI. The first message that matches the URI is the one that will be displayed. We support wildcards * and ** to make it more versatile!

To display a message on all pages, use /** as the URI.

If you want to target one specific page, use its URI, e.g. /about-us/the-team
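The first-match rule above can be sketched as follows. This is a hypothetical reimplementation for illustration, not the Widget's own code, and it assumes glob-like semantics that the text does not spell out: `*` matches characters within a single path segment, and `**` matches across any number of segments.

```python
import re


def pattern_to_regex(pattern):
    """Translate a URI pattern into a compiled regex.
    Assumption: "*" stays within one path segment, "**" spans segments."""
    regex = ""
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            regex += ".*"      # any characters, including "/"
            i += 2
        elif pattern[i] == "*":
            regex += "[^/]*"   # any characters except "/"
            i += 1
        else:
            regex += re.escape(pattern[i])
            i += 1
    return re.compile(regex)


def find_greeting(uri, greetings):
    """Return the message of the first (pattern, message) pair matching uri."""
    for pattern, message in greetings:
        if pattern_to_regex(pattern).fullmatch(uri):
            return message
    return None


greetings = [
    ("/about-us/the-team", "Want to join the team?"),
    ("/blog/*", "Enjoying the article?"),
    ("/**", "Hi there! How can I help?"),  # catch-all, so it goes last
]
print(find_greeting("/blog/my-post", greetings))
```

Since the first match wins, greetings should be listed from the most specific URI to the least specific, with /** at the end.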

Several configuration options are available so as to maximize compatibility across browsers.

By default, the widget will be displayed on the right side of the screen. To display the widget on the left instead, simply add data-position="left".

To add custom metadata in a widget, you need to add a data-metadata attribute to the widget initialization script tag that contains the URL-encoded JSON string of the metadata you want to inject.

For example: data-metadata="%7B%22email%22%3A%22jane.doe%40company.com%22%7D"
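The encoded value in the example above can be reproduced by serializing the metadata as compact JSON and percent-encoding every reserved character. For this input, the result is the same as JavaScript's encodeURIComponent; Python is used here only to show the transformation.

```python
import json
from urllib.parse import quote

metadata = {"email": "jane.doe@company.com"}

# Compact JSON (no spaces), then percent-encode everything except unreserved characters
encoded = quote(json.dumps(metadata, separators=(",", ":")), safe="")
print(f'data-metadata="{encoded}"')
# data-metadata="%7B%22email%22%3A%22jane.doe%40company.com%22%7D"
```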

You can configure a custom image to be used as the widget logo by setting this parameter to the URL of your image. You can use a transparent PNG or an SVG, or directly use a square image without a transparent background (it will be rounded automatically) as the logo.

For example: data-logo-url="https://cdn.clevy.io/clevy-logo-square-white.png"

These two parameters let you change the fill color of the default icons, as well as the background for the launcher button.

Any valid CSS value for these elements is accepted. The default values are:

Forces the widget to open as soon as the script is loaded. This is not recommended, as it might be a bit aggressive for visitors of the page, but it may be useful in some cases!

Set the Assistant's to trigger a specific flow or step.

The settings panel has a few options to allow you to configure the Assistant. Each Assistant must have a name, and you can also add an optional description and logo.

When a user arrives on the page, the chatbot welcomes them with a flow. It can be any flow, but by default it is the Default flow.

If you do not want a welcome interaction at all, create an empty flow and use that as the Welcome flow!

The Assistant channel lets you add shortcuts to either flows or external websites:

This will show as a list of searchable shortcuts in the sidebar in full-screen view, or at the top of the input bar in mobile/widget view:

The autocomplete feature helps your chatbot provide a list of up to 5 items that are contextualized to what the user is currently typing. Think of it as quickly hitting one of the first Google search results!

To configure Autocomplete, you need to have a backend endpoint that will handle the requests and respond accordingly. You can configure this endpoint in the Assistant's configuration panel:

The request that will be performed by the Assistant will be as follows:

Your endpoint must return results within 5 seconds with an application/json content-type, formatted as an array of at most 5 results, each containing a title, optional highlighting matches, and the corresponding payload.

When a user selects a result, it will behave as a button click where the text is displayed and the payload is sent to the chatbot.
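The core of such an endpoint could look like the sketch below: a case-insensitive substring search that returns at most 5 results, each with a title, the matched character range and a payload. The exact response field names and the shape of the highlighting matches are not specified in this section, so "title", "matches" (with "start"/"end") and "payload" are assumptions, as is the `autocomplete` function itself; the HTTP server around it is left out.

```python
import json

MAX_RESULTS = 5  # the Assistant accepts at most 5 results


def autocomplete(query, entries):
    """Return up to MAX_RESULTS entries whose title contains the query.

    entries is a list of (title, payload) pairs. The output field names
    and the {"start", "end"} match format are assumptions for this sketch."""
    q = query.strip().lower()
    if not q:
        return []
    results = []
    for title, payload in entries:
        start = title.lower().find(q)
        if start == -1:
            continue
        results.append({
            "title": title,
            "matches": [{"start": start, "end": start + len(q)}],  # range to highlight
            "payload": payload,
        })
        if len(results) == MAX_RESULTS:
            break  # stop early once the limit is reached
    return results


entries = [
    ("Reset my password", "RESET_PASSWORD"),
    ("Change password", "CHANGE_PASSWORD"),
    ("Contact support", "SUPPORT"),
]
# JSON body the endpoint would send back with Content-Type: application/json
print(json.dumps(autocomplete("pass", entries)))
```

A simple in-memory scan keeps the response fast; for large catalogs, a search index would be a better fit for the 5-second limit.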

If an autocomplete endpoint is set, it will be enabled by default. However, it can be disabled or re-enabled dynamically in your CSML script:

How to set up a Microsoft Teams channel in CSML Studio

The installation process of a Microsoft Teams chatbot has many steps. You will also need to be able to create an app and a bot on your Microsoft Teams and Azure tenants.

To get started, visit Channels > Connect new channel > Microsoft Teams (beta).

Retrieve your Messaging endpoint from the Studio configuration page:

Visit to create a new bot on Bot Framework. You may be asked to log in to your developer account. On the next page, fill in a logo, name, description and bot handle for your bot, as well as the Messaging endpoint from the previous step. Leave Enable Streaming Endpoint unchecked. Take note of the bot handle for later.

Next, select Create Microsoft App ID and password.

You will be taken to a new page on Azure to register a new app (or list existing apps). Click on New registration.

On the next page, fill in a Name, select the Accounts in any organizational directory - Multitenant option (the reason is that you will be using the Microsoft Bot Framework tenant for your bot, which will require access to the app as well).

You can leave the Redirect URI fields empty, then click Register.

On the next page, create a New client secret, give it a name and a validity date (if you select anything other than Never, you will need to come back occasionally and get a new secret), submit, then take note of the given secret (you will never be able to see it again).

Now, go to Overview and take note of the App ID for later. After that, you can close this window.

Back to the Bot Framework configuration window, paste the Microsoft App ID you just created where required, then scroll to the bottom of the page, check the "I Agree" box and click Register.

After registering your bot, you need to add Microsoft Teams as a channel. To do so, simply click on the Configure Microsoft Teams channel icon.

On the next page, simply click Save without doing anything else, check the "I Agree" box, and submit.

You can now close this window as well and go back to the CSML Studio.

Back to the CSML Studio channel creation page, fill in the App ID, App secret and Bot handle, then submit.

In the next page, you can generate and download a Manifest for your Microsoft Teams chatbot. A manifest is a configuration file that you will be able to upload on Microsoft Teams in order to deploy your chatbot to your team. It contains all the information required for Microsoft to correctly install and display your bot to every user.

Upon saving, a manifest.zip file will be downloaded to your computer. Save it somewhere for the next (and final) installation step.

On Microsoft Teams (web or desktop), find the Apps panel, then select Upload custom app > Upload for MY_TEAM (obviously, replace MY_TEAM with the name of your own team).

Then, click on the App you just uploaded, then select Add.

Voilà! Your bot is now available to all users in your team:

CSML Studio also comes with a number of tools helping you perform day-to-day tasks such as generating QR codes, manipulating dates and times, or scheduling conversation events in the future.

The scheduler is a good way to plan events to happen at a later time. There are 2 types of scheduled events:

webhook, when you want a certain URL to be notified at a later time

In order to connect your users to various services, you may need to authenticate users. There are several ways to do this, depending on the authentication methods that are available in the 3rd-party service.

CSML chatbots run on a secure server, which means that you can load the credentials for the service you want to access into your chatbot's environment variables, or access them dynamically or statically inside a flow. If a single set of credentials lets all your users access the service, you probably created a dedicated service account: an account made specifically for the chatbot to access your service, with credentials that all users share to perform the actions they need.

This method is usually simple to implement (generate a set of credentials once and for all and load it in the chatbot) but has a major drawback: the same credentials are used for all of your users, who can then only be told apart by other means (for example, if the ID key is their email address, you could send them an email with a code to that address to make sure that they are who they claim to be).
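The email-code check mentioned above can be sketched as a one-time code with an expiry. This is an illustrative sketch, not a CSML Studio feature: the function names and the 10-minute validity window are hypothetical, and actually sending the email is out of scope. Only a hash of the code is kept server-side, and the comparison uses a constant-time check.

```python
import hashlib
import hmac
import secrets
import time

CODE_TTL_SECONDS = 600  # hypothetical validity window: 10 minutes


def generate_code():
    """Create a random 6-digit code to email to the user.
    Returns the code and a (digest, expires_at) record to store server-side."""
    code = f"{secrets.randbelow(10**6):06d}"
    digest = hashlib.sha256(code.encode()).hexdigest()
    return code, (digest, time.time() + CODE_TTL_SECONDS)


def verify_code(submitted, record):
    """Check a code typed by the user against the stored record."""
    digest, expires_at = record
    if time.time() > expires_at:
        return False  # code has expired
    submitted_digest = hashlib.sha256(submitted.strip().encode()).hexdigest()
    return hmac.compare_digest(submitted_digest, digest)


code, record = generate_code()
print(verify_code(code, record))
```

A 6-digit code is easy to guess by brute force, so in practice you would also limit the number of verification attempts per user.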

// This will redirect the call to the provided number
say CallForwarding("+33123456789")

{
  "AccountSid": "ACa63XXXXXXXXXXa94",
  "ApiVersion": "2010-04-01",
  "ApplicationSid": "AP95bXXXXXXXXXX17a",
  "CallSid": "CA13bXXXXXXXXXXc6b",
  "CallStatus": "ringing",
  "Called": "+336XXXXXXXXX",
  "CalledCity": "",
  "CalledCountry": "FR",
  "CalledState": "",
  "CalledZip": "",
  "Caller": "+336XXXXXXXX",
  "CallerCity": "",
  "CallerCountry": "FR",
  "CallerState": "",
  "CallerZip": "",
  "Direction": "inbound",
  "From": "+336XXXXXXXXX",
  "FromCity": "",
  "FromCountry": "FR",
  "FromState": "",
  "FromZip": "",
  "To": "+336XXXXXXXXX",
  "ToCity": "",
  "ToCountry": "FR",
  "ToState": "",
  "ToZip": "",
  "_channel": {
    "type": "twilio-voice",
    "name": "XXXXXXXXXXXX"
  }
}

exports.handler = async (event) => {

const text = event.content.text;